Navigating Overfitting: Understanding and Implementing Regularization Techniques in Data Science

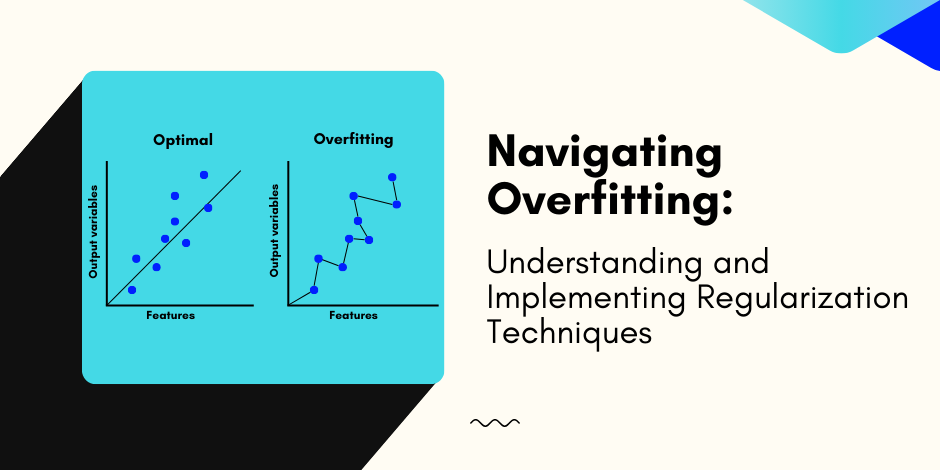

Overfitting is a common issue with machine learning (ML) models.

When a model is overfitted, it performs well on the training data but fails to generalize to new, unseen data.

This can result in poor performance and inaccurate predictions.

In this article, we will explore the concept of overfitting, its impact on models, and how to address it using regularization techniques in data science.

Understanding the concept of overfitting

Overfitting happens when a model becomes too complex and starts to memorize the noise and randomness in the training data.

As a result, it fits the training data too closely, leading to poor performance on unseen data.

Let’s delve into the basics of overfitting.

When a model overfits, it learns the training data so well that it loses its ability to generalize to new, unseen data.

This phenomenon is akin to a student memorizing the answers to specific exam questions without truly understanding the underlying concepts.

Just as the student would struggle with new questions that require knowledge application, an overfitted model falters when faced with data it has yet to see.

The basics of overfitting

Overfitting happens when the model fits the training data with such precision that it captures the noise and randomness in the data.

Over-optimizing the model to the training data therefore leads to poorer generalization and less accurate predictions on new data.
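
To make this concrete, the sketch below fits polynomials of two different degrees to the same small, noisy dataset using NumPy. The data (a sine curve plus Gaussian noise), the sample sizes, and the degrees 3 and 12 are illustrative assumptions, not values from any particular study. The higher-degree polynomial has enough flexibility to chase the noise, so it typically reaches a lower training error but a noticeably higher test error.

```python
import numpy as np

rng = np.random.default_rng(0)


def true_fn(x):
    """Underlying signal the model should learn."""
    return np.sin(2 * np.pi * x)


def mse(y, y_hat):
    """Mean squared error."""
    return float(np.mean((y - y_hat) ** 2))


# Synthetic data: 15 noisy training points and 100 noisy test points.
x_train = np.sort(rng.uniform(0, 1, 15))
y_train = true_fn(x_train) + rng.normal(0, 0.2, x_train.size)
x_test = np.sort(rng.uniform(0, 1, 100))
y_test = true_fn(x_test) + rng.normal(0, 0.2, x_test.size)

results = {}
for degree in (3, 12):
    coeffs = np.polyfit(x_train, y_train, degree)  # least-squares polynomial fit
    train_err = mse(y_train, np.polyval(coeffs, x_train))
    test_err = mse(y_test, np.polyval(coeffs, x_test))
    results[degree] = (train_err, test_err)
    print(f"degree={degree:2d}  train MSE={train_err:.4f}  test MSE={test_err:.4f}")
```

Exact numbers depend on the random seed; the pattern to look for is the widening gap between training and test error as the degree grows.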

The impact of overfitting on ML models

Overfitting can have severe repercussions on ML models.

It reduces the model’s ability to generalize and make accurate predictions on unseen data.

While the model may perform well on the training data, it fails to perform effectively in real-world scenarios, which severely limits its usefulness.

Identifying signs of overfitting in your model

Several common signs indicate overfitting in a model.

These signs include a significant difference in performance between training and validation data, high accuracy on training data but low accuracy on test data, and unstable performance when the training data changes.
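
The first sign, a gap between training and validation performance, is easy to check. A minimal sketch, assuming scikit-learn and a synthetic classification dataset: a decision tree with no depth limit usually memorizes its training data, so comparing its training and validation accuracy exposes the gap. The `GAP_THRESHOLD` value is a hypothetical cut-off chosen for illustration, not a standard.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic classification data with 10% of labels flipped to add noise.
X, y = make_classification(n_samples=500, n_features=20, n_informative=5,
                           flip_y=0.1, random_state=0)
X_train, X_val, y_train, y_val = train_test_split(X, y, test_size=0.3,
                                                  random_state=0)

# A tree with no depth limit keeps splitting until every leaf is pure,
# so it fits the training set perfectly.
model = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)
train_acc = model.score(X_train, y_train)
val_acc = model.score(X_val, y_val)
gap = train_acc - val_acc

GAP_THRESHOLD = 0.10  # hypothetical cut-off; choose one that suits your problem
print(f"train={train_acc:.3f}  validation={val_acc:.3f}  gap={gap:.3f}")
if gap > GAP_THRESHOLD:
    print("Large train/validation gap: the model may be overfitting.")
```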

An introduction to regularization techniques in data science

Regularization techniques in data science are used to mitigate the problem of overfitting in ML models.

They introduce a penalty term to the model’s objective function, discouraging it from becoming too complex.
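
A minimal sketch of such a penalized objective for a linear model, assuming mean squared error as the data loss. The function name `penalized_mse` and its `lam` (penalty strength) and `penalty` arguments are hypothetical, chosen for illustration; real libraries differ in how they scale each term and usually leave the intercept unpenalized.

```python
import numpy as np


def penalized_mse(w, X, y, lam, penalty="l2"):
    """Mean squared error of a linear model plus a penalty on its weights w.

    lam controls the penalty strength; penalty selects L1 or L2.
    """
    residuals = y - X @ w
    data_loss = np.mean(residuals ** 2)
    if penalty == "l2":
        reg = np.sum(w ** 2)       # L2: sum of squared weights
    elif penalty == "l1":
        reg = np.sum(np.abs(w))    # L1: sum of absolute weights
    else:
        raise ValueError("penalty must be 'l1' or 'l2'")
    return float(data_loss + lam * reg)


X = np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
y = np.array([1.0, 2.0, 3.0])
w = np.array([0.5, -0.25])
print(penalized_mse(w, X, y, lam=0.0))                # plain MSE
print(penalized_mse(w, X, y, lam=0.1, penalty="l2"))  # MSE + L2 penalty
print(penalized_mse(w, X, y, lam=0.1, penalty="l1"))  # MSE + L1 penalty
```

With `lam=0.0` the function reduces to plain mean squared error; any positive `lam` adds a cost that grows with the size of the weights.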

Let’s delve into the role of regularization in combating overfitting.

Regularization techniques in data science are fundamental to ML and essential for building robust and generalizable models.

They play a crucial role in addressing the common issue of overfitting, where a model performs well on training data but fails to generalize to unseen data.

ML practitioners can balance model complexity and performance by incorporating regularization techniques in data science.

The role of regularization in combating overfitting

The primary purpose of regularization techniques in data science is to prevent overfitting by adding a penalty to the model’s complexity.

By doing so, regularization encourages simpler models that are less prone to overfitting.

Regularization techniques in data science act as a restraint, balancing model complexity and generalization ability.

Moreover, regularization techniques not only help in preventing overfitting but also aid in improving model interpretability.

Some forms of regularization, notably L1, can also enhance transparency by shrinking the coefficients of less useful features to exactly zero, leaving a simpler model whose remaining features make the relationships it has captured easier to understand.
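
The sparsity effect can be observed directly. This sketch assumes scikit-learn and synthetic regression data in which only 5 of 50 features are informative; the `alpha=1.0` penalty strengths are arbitrary illustrative choices. Lasso (L1) typically sets many coefficients to exactly zero, whereas Ridge (L2) shrinks them without eliminating any.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso, Ridge

# 50 features, only 5 of which actually drive the target.
X, y = make_regression(n_samples=200, n_features=50, n_informative=5,
                       noise=10.0, random_state=0)

lasso = Lasso(alpha=1.0).fit(X, y)
ridge = Ridge(alpha=1.0).fit(X, y)

# L1 can set coefficients to exactly zero; L2 only shrinks them toward zero.
lasso_zeros = int(np.sum(lasso.coef_ == 0))
ridge_zeros = int(np.sum(ridge.coef_ == 0))
print(f"Lasso zero coefficients: {lasso_zeros} / {X.shape[1]}")
print(f"Ridge zero coefficients: {ridge_zeros} / {X.shape[1]}")
```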

Different types of regularization techniques

Several regularization techniques are available in data science, each with its own approach to combating overfitting.

Some common types include L1 regularization (Lasso), L2 regularization (Ridge regression), and ElasticNet, which combines L1 and L2 regularization.

Each type of regularization technique has strengths and weaknesses, making it crucial for data scientists to choose the most appropriate method based on the dataset’s characteristics and the model’s specific goals.
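
One way to experiment is to compare the techniques on the same data with cross-validation. The sketch below assumes scikit-learn and a synthetic regression dataset; the `alpha` and `l1_ratio` values are untuned starting points, so the ranking it prints says nothing general about which method is better.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import ElasticNet, Lasso, Ridge
from sklearn.model_selection import cross_val_score

X, y = make_regression(n_samples=200, n_features=50, n_informative=10,
                       noise=15.0, random_state=1)

# Penalty settings are arbitrary starting points, not tuned recommendations.
models = {
    "Lasso (L1)": Lasso(alpha=0.5),
    "Ridge (L2)": Ridge(alpha=0.5),
    "ElasticNet (L1 + L2)": ElasticNet(alpha=0.5, l1_ratio=0.5),
}

scores = {}
for name, model in models.items():
    cv = cross_val_score(model, X, y, cv=5, scoring="r2")  # 5-fold R^2
    scores[name] = cv.mean()
    print(f"{name:22s} mean R^2 = {cv.mean():.3f} (+/- {cv.std():.3f})")
```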

Experimenting with different regularization techniques in data science can help fine-tune the model’s performance and achieve optimal results.

The mathematics behind regularization

Regularization techniques in data science involve adding a penalty term to the model’s objective function.

This penalty term depends on the regularization technique and imposes constraints on the model’s coefficients.
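
For a linear model trained with mean squared error, the three techniques named earlier differ only in their penalty term. In one common way of writing them, n is the number of training examples, x_i and y_i are the features and target of example i, w = (w_1, ..., w_p) are the model's coefficients, lambda >= 0 sets the penalty strength, and rho in [0, 1] is the ElasticNet mixing weight. Libraries scale these terms differently (scikit-learn's Ridge, for instance, omits the 1/n factor, while its Lasso uses 1/(2n)), so the same numeric lambda is not interchangeable across tools.

```latex
\begin{aligned}
\text{Ridge (L2):}\quad & J(\mathbf{w}) = \frac{1}{n}\sum_{i=1}^{n}\bigl(y_i - \mathbf{x}_i^\top \mathbf{w}\bigr)^2 + \lambda \sum_{j=1}^{p} w_j^2 \\
\text{Lasso (L1):}\quad & J(\mathbf{w}) = \frac{1}{n}\sum_{i=1}^{n}\bigl(y_i - \mathbf{x}_i^\top \mathbf{w}\bigr)^2 + \lambda \sum_{j=1}^{p} \lvert w_j \rvert \\
\text{ElasticNet:}\quad & J(\mathbf{w}) = \frac{1}{n}\sum_{i=1}^{n}\bigl(y_i - \mathbf{x}_i^\top \mathbf{w}\bigr)^2 + \lambda \Bigl(\rho \sum_{j=1}^{p} \lvert w_j \rvert + (1-\rho) \sum_{j=1}^{p} w_j^2\Bigr)
\end{aligned}
```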

By adjusting the strength of the penalty term (often denoted λ), we can control the trade-off between fitting the training data closely and keeping the model simple: a larger penalty yields a simpler, more constrained model.
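
For Ridge regression, the effect of the penalty strength can be seen in closed form. Setting the gradient of the objective (1/n)||y - Xw||^2 + lambda * ||w||^2 to zero gives (X^T X + n*lambda*I) w = X^T y. The NumPy sketch below solves that system for several values of lambda on synthetic data; it omits the intercept for brevity, and the helper name `ridge_closed_form` is hypothetical. As lambda grows, the coefficient norm shrinks while the training error rises, which is the trade-off described above.

```python
import numpy as np


def ridge_closed_form(X, y, lam):
    """Minimize (1/n)||y - Xw||^2 + lam * ||w||^2 (no intercept).

    Setting the gradient to zero gives (X^T X + n*lam*I) w = X^T y.
    """
    n, p = X.shape
    A = X.T @ X + n * lam * np.eye(p)
    return np.linalg.solve(A, X.T @ y)


# Synthetic linear data with a little noise.
rng = np.random.default_rng(42)
X = rng.normal(size=(30, 10))
true_w = rng.normal(size=10)
y = X @ true_w + rng.normal(scale=0.5, size=30)

norms, train_mses = [], []
for lam in (0.0, 0.01, 0.1, 1.0, 10.0):
    w = ridge_closed_form(X, y, lam)
    norms.append(np.linalg.norm(w))
    train_mses.append(np.mean((y - X @ w) ** 2))
    print(f"lambda={lam:<5}  ||w||={norms[-1]:.3f}  train MSE={train_mses[-1]:.3f}")
```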

Understanding the mathematical principles behind regularization is essential for grasping its impact on model training and performance.

It provides insight into how regularization influences the model's behavior and helps you make informed decisions when implementing regularization in machine learning projects.

Implementing regularization techniques

Now that we understand the basics of regularization techniques in data science, let's explore how to implement them in practice.

Preparing your data for regularization

It is crucial to preprocess and prepare your data appropriately before applying regularization.

This includes handling missing values, scaling numerical features, and encoding categorical variables.
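
These steps can be chained so they are applied consistently during training and prediction. A minimal sketch, assuming scikit-learn and pandas; the toy DataFrame and its column names are hypothetical. Scaling matters here because the penalty acts on raw coefficient sizes: without it, features measured on larger scales need smaller coefficients and are therefore penalized less than others.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.linear_model import Ridge
from sklearn.pipeline import Pipeline, make_pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical toy data with a missing value and a categorical column.
df = pd.DataFrame({
    "size_m2": [50.0, 80.0, np.nan, 120.0, 65.0, 95.0],
    "rooms": [1, 3, 2, 4, 2, 3],
    "city": ["A", "B", "A", "C", "B", "C"],
    "price": [150.0, 260.0, 200.0, 390.0, 210.0, 300.0],
})
X, y = df.drop(columns="price"), df["price"]

preprocess = ColumnTransformer([
    # Fill missing numbers with the median, then standardize.
    ("num", make_pipeline(SimpleImputer(strategy="median"), StandardScaler()),
     ["size_m2", "rooms"]),
    # One-hot encode categories; ignore categories unseen during training.
    ("cat", OneHotEncoder(handle_unknown="ignore"), ["city"]),
])
model = Pipeline([("prep", preprocess), ("ridge", Ridge(alpha=1.0))])
model.fit(X, y)
print(model.predict(X.head(2)))
```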

Applying regularization to a machine learning model

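To apply regularization, include the penalty term in the model's objective function.

In practice, most ML libraries already build this penalty into their regularized models, so you rarely modify the objective by hand. Instead, you select the regularized variant of a model and set its hyperparameters, such as the regularization parameter that controls the penalty strength in L1 or L2 regularization.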
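
For example, in scikit-learn the penalty is selected through constructor arguments rather than written by hand. The sketch below, on synthetic data, fits logistic regression with an L2 and an L1 penalty. Note that scikit-learn's `LogisticRegression` exposes the strength as `C`, the inverse of the regularization parameter, so a smaller `C` means a stronger penalty; the values used here are arbitrary.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = make_classification(n_samples=300, n_features=20, n_informative=4,
                           random_state=0)

# C is the inverse of the regularization strength: smaller C = stronger penalty.
models = {
    "L2, C=1.0": make_pipeline(StandardScaler(),
                               LogisticRegression(penalty="l2", C=1.0)),
    # The liblinear solver supports the L1 penalty.
    "L1, C=0.1": make_pipeline(StandardScaler(),
                               LogisticRegression(penalty="l1", C=0.1,
                                                  solver="liblinear")),
}

zero_counts = {}
for name, model in models.items():
    model.fit(X, y)
    coefs = model[-1].coef_.ravel()  # coefficients of the final pipeline step
    zero_counts[name] = int((coefs == 0).sum())
    print(f"{name}: {zero_counts[name]} of {coefs.size} coefficients are exactly zero")
```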

Evaluating the effectiveness of regularization

After implementing regularization, it is essential to evaluate its effectiveness.

This involves assessing the model’s performance on the training and test datasets and comparing it to the performance without regularization.

Which evaluation metrics to use depends on the task: accuracy, precision, and recall suit classification models, while metrics such as mean squared error or R² suit regression models.
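
A minimal sketch of that comparison, assuming scikit-learn and a synthetic regression problem with few samples relative to features (a setting prone to overfitting). Because this is regression, it reports R² and mean squared error rather than accuracy; `alpha=10` is an arbitrary illustrative choice.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression, Ridge
from sklearn.metrics import mean_squared_error, r2_score
from sklearn.model_selection import train_test_split

# 60 features but only 40 training samples after the split.
X, y = make_regression(n_samples=80, n_features=60, n_informative=10,
                       noise=20.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.5,
                                                    random_state=0)

report = {}
for name, model in [("no regularization", LinearRegression()),
                    ("ridge, alpha=10", Ridge(alpha=10.0))]:
    model.fit(X_train, y_train)
    report[name] = {
        "train_r2": r2_score(y_train, model.predict(X_train)),
        "test_r2": r2_score(y_test, model.predict(X_test)),
        "test_mse": mean_squared_error(y_test, model.predict(X_test)),
    }
    print(f"{name}: " + ", ".join(f"{k}={v:.3f}" for k, v in report[name].items()))
```

With more features than training samples, the unregularized model can fit the training set almost perfectly, so its training R² is not a trustworthy guide; the test-set numbers are the ones to compare.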

Overcoming challenges in regularization

While regularization techniques in data science are powerful tools for combating overfitting, they come with challenges.

Let’s explore some of the common obstacles faced when implementing regularization.

Dealing with high-dimensional data

In practice, many datasets contain a large number of features, which leads to high-dimensional data.

This poses a challenge in regularization as it becomes harder to determine which features are essential for the model.

Feature selection and dimensionality reduction techniques can be employed to address this challenge.
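
As one illustration, dimensionality reduction can be combined with regularization in a single pipeline. This sketch assumes scikit-learn and synthetic data with 500 features but only 100 samples; keeping 20 principal components and using `alpha=1.0` are arbitrary choices that would normally be tuned.

```python
from sklearn.datasets import make_regression
from sklearn.decomposition import PCA
from sklearn.linear_model import Ridge
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# High-dimensional setting: far more features than samples.
X, y = make_regression(n_samples=100, n_features=500, n_informative=20,
                       effective_rank=15, noise=5.0, random_state=0)

# Standardize, project onto 20 principal components, then fit Ridge.
model = make_pipeline(StandardScaler(), PCA(n_components=20), Ridge(alpha=1.0))
scores = cross_val_score(model, X, y, cv=5, scoring="r2")
print(f"mean CV R^2 with PCA + Ridge: {scores.mean():.3f}")
```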

Addressing the bias-variance trade-off

Regularization helps find the right balance between the model’s bias and variance.

However, striking this balance can be challenging.

A model with high bias may underfit the data, while a model with high variance may overfit the data.

It is crucial to experiment and fine-tune the regularization parameters to achieve an optimal bias-variance trade-off.
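
A validation curve is one way to see this trade-off while tuning. The sketch below, assuming scikit-learn and synthetic data, scores Ridge regression across a range of `alpha` values with 5-fold cross-validation. Very small `alpha` values leave variance unchecked and very large ones introduce bias, so the validation score is usually highest somewhere in between; exactly where depends on the data.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import validation_curve

X, y = make_regression(n_samples=150, n_features=40, n_informative=8,
                       noise=25.0, random_state=0)
alphas = np.logspace(-3, 3, 7)  # candidate strengths from 0.001 to 1000

# Each returned array has shape (number of alphas, number of CV folds).
train_scores, val_scores = validation_curve(
    Ridge(), X, y, param_name="alpha", param_range=alphas, cv=5, scoring="r2")

for a, tr, va in zip(alphas, train_scores.mean(axis=1), val_scores.mean(axis=1)):
    # Training R^2 can only fall as alpha grows; validation R^2 usually peaks in between.
    print(f"alpha={a:>8.3f}  train R^2={tr:.3f}  validation R^2={va:.3f}")
```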

Optimizing regularization parameters

Regularization techniques often come with hyperparameters that need to be optimized.

The choice of these parameters can significantly impact the model’s performance.

Cross-validation can be employed to find your model’s optimal regularization parameters.
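
A minimal sketch of cross-validated tuning, assuming scikit-learn: `GridSearchCV` tries each candidate `alpha` for a Lasso model and keeps the one with the best average validation score. The dataset is synthetic and the grid of candidates is an arbitrary example.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = make_regression(n_samples=200, n_features=30, n_informative=6,
                       noise=10.0, random_state=0)

# Scaling sits inside the pipeline so each fold is scaled using only its training split.
pipe = make_pipeline(StandardScaler(), Lasso(max_iter=10_000))
param_grid = {"lasso__alpha": np.logspace(-3, 1, 9)}  # 0.001 to 10

search = GridSearchCV(pipe, param_grid, cv=5, scoring="neg_mean_squared_error")
search.fit(X, y)
print("best alpha:", search.best_params_["lasso__alpha"])
print("best CV MSE:", -search.best_score_)
```

scikit-learn also provides estimators with built-in cross-validation, such as `RidgeCV`, `LassoCV`, and `ElasticNetCV`, which can be more efficient for these specific models.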

Conclusion

Overfitting is a common challenge in ML models.

Regularization techniques are powerful tools for tackling overfitting.

They add a penalty term to the model’s objective function.

By understanding the basics of overfitting, the role and types of regularization, and how to implement it effectively, we can navigate overfitting and build more robust ML models.

Want to learn more about how to level up in data science?

As your learning partner, the Institute of Data’s Data Science & AI program equips you with industry-reputable accreditation in this sought-after arena in tech.

We’ll prepare you with the support, resources, and cutting-edge programs needed to create a successful career.

Ready to learn more about our programs? Contact our local team for a free career consultation.